The right data at the right time in the right place, prepared appropriately for each recipient: this is how one could describe the ideal of a functioning business intelligence infrastructure. In reality, achieving it often requires a large number of system components that have to work together in step. As the number of source systems and consumers of an analytical data warehouse grows, coordinating the processes and monitoring their status and timeliness becomes a challenge in itself. An orchestration service such as Apache Airflow can be the right tool for keeping an overview. Airflow not only offers a wide range of functions for controlling, securing and tracking scheduled tasks, but as an open source project it can also be operated free of licensing costs. For many companies, however, one question quickly arises: can Apache Airflow be operated on a Windows server? In this article we present the options, along with their advantages and disadvantages.

We keep coming across companies that are looking for a suitable service for defining and managing workflows and data pipelines, and NextLytics strongly recommends Apache Airflow. Airflow takes an entirely code-based approach to defining workflows and processes, which makes them reliable and keeps the operation of this beating heart of a business intelligence infrastructure fully traceable. Airflow continues to focus on extensibility, is anchored in Python, one of the most popular programming languages today, and is highly customisable with countless extension modules. However, Apache Airflow is primarily designed to run on Linux, on a container virtualisation platform or in the cloud, and that is sometimes a hurdle: data may only be processed in the company's own data centre, or there is not yet a cloud strategy ready for implementation. And finally, the in-house IT department may only offer Windows Server as an operating system.

Apache Airflow and Windows: the possibilities

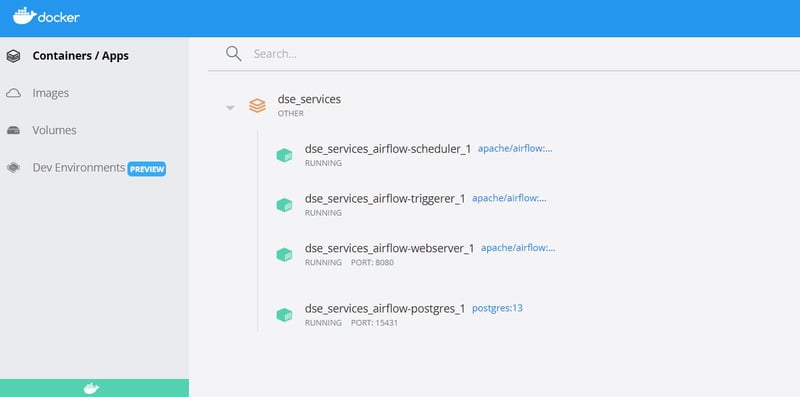

Apache Airflow is based on a micro-services architecture: several individually scalable services, such as the web server, the scheduler and the workers, take on different tasks and coordinate with each other via a shared metadata database and, depending on the executor, a message broker. Such an architecture can be operated with little effort using container virtualisation, provided the appropriate infrastructure is available. A virtual server with Docker Engine is often sufficient for small to medium-sized systems; more powerful systems feel particularly at home in a Kubernetes cluster. Docker in particular has invested heavily in recent years in running as a virtualisation layer on Windows Server operating systems. At first glance the case seems clear: deploy Windows Server with Docker Engine, roll out the Airflow containers and you're ready to go.

In practice, however, you are quickly confronted with different variants and problems. The most common ways of running Airflow with Docker on a Windows system are:

- Operation of the Docker Engine using Docker Desktop

- Operation of a Docker Engine in a virtual Linux environment provided via "Windows Subsystem for Linux" (WSL)

There are numerous instructions and best practices for both variants on the Internet, but beware: neither of them runs entirely smoothly.

Apache Airflow with Docker Desktop

Probably the easiest way to run Airflow on a Windows operating system is the "Docker Desktop" programme. Docker Desktop provides a graphical user interface for activating the Docker Engine and starting Docker containers. The current version of Docker Desktop uses version 2 of the Windows Subsystem for Linux (WSL 2) to set up a virtual Linux environment in the background and runs the Docker Engine in it, completely unnoticed by the user. The Docker command line interface is then available directly in Windows and can be used from PowerShell with familiar commands such as "docker run" or "docker compose".
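Since the Docker CLI is available directly in Windows, routine checks on the Compose stack can also be scripted, for example in Python. The following is a minimal sketch, assuming Docker Desktop is running and the current directory holds the docker-compose.yaml of the Airflow installation. It calls `docker compose ps --format json` and maps each service to its state; it assumes the `Service` and `State` fields printed by Compose v2, and accepts both the JSON array of older releases and the one-object-per-line output of newer ones.

```python
"""Sketch: report the state of each Airflow Compose service (Compose v2 assumed)."""
import json
import subprocess


def parse_compose_ps(output: str) -> dict[str, str]:
    """Map service name -> state from `docker compose ps --format json` output.

    Older Compose v2 releases print a single JSON array, newer ones print one
    JSON object per line; both layouts are handled here.
    """
    text = output.strip()
    if not text:
        return {}
    if text.startswith("["):
        entries = json.loads(text)
    else:
        entries = [json.loads(line) for line in text.splitlines() if line.strip()]
    return {entry["Service"]: entry["State"] for entry in entries}


def compose_service_states() -> dict[str, str]:
    """Run `docker compose ps` in the current directory and parse the result."""
    result = subprocess.run(
        ["docker", "compose", "ps", "--format", "json"],
        capture_output=True,
        text=True,
        check=True,  # raise if the Docker CLI reports an error
    )
    return parse_compose_ps(result.stdout)
```

Run from the installation directory in PowerShell, `compose_service_states()` returns a dictionary that can be printed or passed on to a monitoring job; keeping the parser separate from the subprocess call makes it easy to test without a running engine.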

In this variant, integration into the Windows server is as seamless as possible. The base directories of a Compose-based Airflow installation are located directly in the Windows file system and can be managed with the usual tools such as PowerShell, Git for Windows and Explorer.

Another big plus is that Docker Desktop automatically forwards ports between the host operating system and the Docker containers, so no additional configuration is required. Continuous integration and continuous delivery (CI/CD) for Airflow can be set up with the toolset of choice, be it GitLab CI/CD or Azure DevOps Pipelines.
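Because published container ports are reachable on the Windows host, a deployment pipeline or monitoring job can verify the installation over HTTP. This sketch assumes an Airflow 2.x web server published on port 8080, the default in the official docker-compose.yaml; the `/health` path may differ in other Airflow versions. It reports every component whose status is present but not "healthy", and treats a missing (null) status as a component that is simply not deployed.

```python
"""Sketch: query the Airflow 2.x health endpoint from the Windows host."""
import json
import urllib.request

# Assumption: default port of the official docker-compose.yaml; adjust as needed.
AIRFLOW_HEALTH_URL = "http://localhost:8080/health"


def unhealthy_components(payload: dict) -> list[str]:
    """Return the names of components whose reported status is not 'healthy'.

    A status of None means the component is not deployed (for example no
    standalone DAG processor), so it is not counted as a failure.
    """
    problems = []
    for name, info in payload.items():
        status = info.get("status") if isinstance(info, dict) else None
        if status is not None and status != "healthy":
            problems.append(name)
    return sorted(problems)


def fetch_health(url: str = AIRFLOW_HEALTH_URL, timeout: float = 10.0) -> dict:
    """Download and decode the JSON health report."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

A CI/CD step could, for instance, call `unhealthy_components(fetch_health())` after each deployment and fail the pipeline if the returned list is not empty.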

Docker Desktop is designed as a graphical programme that runs in the session of a logged-in user. This is initially a hindrance if Apache Airflow is to run as a permanent service. However, the desired behaviour can still be achieved with an additional start script that Windows Service Management executes when the server starts. Docker Desktop can also be configured so that the Docker Engine starts automatically after a reboot.
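Such a start script could look roughly like the following sketch, written in Python for illustration. It assumes Docker Desktop is installed at its default path and uses a hypothetical installation directory `C:\airflow` containing the docker-compose.yaml. The script launches Docker Desktop, polls `docker info` until the engine answers, and then starts the stack with `docker compose up -d`. How the script is registered to run at boot is not covered here.

```python
"""Sketch of a boot-time start script for Airflow on Docker Desktop."""
import subprocess
import time
from typing import Callable

# Assumption: default Docker Desktop install path.
DOCKER_DESKTOP_EXE = r"C:\Program Files\Docker\Docker\Docker Desktop.exe"
AIRFLOW_DIR = r"C:\airflow"  # hypothetical directory holding docker-compose.yaml


def engine_ready() -> bool:
    """Return True once `docker info` can reach the Docker Engine."""
    try:
        result = subprocess.run(["docker", "info"], capture_output=True)
    except OSError:  # docker CLI not (yet) available on PATH
        return False
    return result.returncode == 0


def wait_until(check: Callable[[], bool], timeout: float, interval: float = 5.0,
               clock: Callable[[], float] = time.monotonic,
               sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll check() every `interval` seconds until it succeeds or time runs out."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


def start_airflow(timeout: float = 300.0) -> None:
    """Launch Docker Desktop, wait for the engine, then start the Compose stack."""
    subprocess.Popen([DOCKER_DESKTOP_EXE])  # non-blocking: the GUI keeps running
    if not wait_until(engine_ready, timeout):
        raise RuntimeError("Docker Engine did not become ready in time")
    subprocess.run(["docker", "compose", "up", "-d"], cwd=AIRFLOW_DIR, check=True)
```

The clock and sleep functions are injectable so the waiting logic can be tested without Docker; in production, `start_airflow()` is the only entry point the boot mechanism needs to call.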

In this variant, our tests showed that mounting Airflow directories from the Windows file system into the Docker containers is problematic. Apparently, file locking is negotiated differently than on Linux-based servers, which makes importing Airflow DAG definition files error-prone. It is therefore advisable to build the Airflow Docker images so that the directories usually mounted from the host system, such as the DAG folder, are included in the image in full.

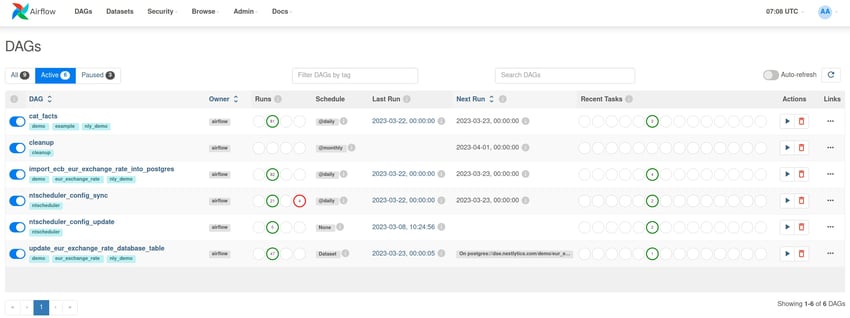


What speaks against operating Apache Airflow this way? From a technical point of view, nothing; the only catch is that Docker Desktop is a commercially licensed product. It is free of charge for personal use, education, non-commercial open source projects and small businesses, but companies with more than 250 employees or more than USD 10 million in annual revenue must purchase paid subscriptions. Prices range from around 60 to a few hundred dollars per user per year, depending on the plan.

Is there, then, another way to run the open source system Airflow on Windows Server without additional licence costs? We tested the following variant for you:

Apache Airflow with WSL

The Windows Subsystem for Linux has been part of Microsoft's operating systems practically across the board for several years now. It runs lightweight virtual Linux environments within a running Windows operating system. Shouldn't it then be possible to run Apache Airflow with Docker in such a WSL environment, just as on a native Linux server?

We tested this variant with an Ubuntu WSL environment. The WSL environment is created according to the usual instructions and controlled via the command line, just like a fully-fledged Ubuntu server. The Docker Engine is installed inside the WSL environment in the usual way, and a standard local operating environment for Apache Airflow with Git and Docker Compose is prepared.

This variant becomes complicated at the interface between the Windows operating system and the WSL Linux environment. WSL environments are designed to be started and used by a logged-in user. Starting them automatically together with the host operating system is not provided for out of the box and has to be built with scripts. User IDs are not integrated between the two environments either, so the WSL environment needs its own local user management. Finally, the host operating system does not automatically expose the ports of the Docker Engine in the WSL environment on its network interfaces, so port forwarding has to be configured manually.
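The start-up scripting and manual port forwarding this variant requires could be sketched as follows. This is a minimal illustration under several assumptions: the WSL distribution is named `Ubuntu`, the Airflow web server is published on port 8080 inside WSL, Docker was installed with its `service` init script, and the script runs with administrator rights, which `netsh interface portproxy` requires. Because the WSL address can change after a restart, the rule should be refreshed at every start; a matching Windows Firewall rule may also be needed.

```python
"""Sketch: start Docker inside WSL and forward a Windows port to Airflow."""
import subprocess

DISTRO = "Ubuntu"    # assumption: name of the WSL distribution
AIRFLOW_PORT = 8080  # assumption: port published by the Airflow web server


def wsl_command(*args: str, user: str = "root") -> list[str]:
    """Build a command line that runs *args inside the WSL distribution."""
    return ["wsl", "-d", DISTRO, "-u", user, "--", *args]


def eth0_ipv4(ip_addr_output: str) -> str:
    """Extract the address from `ip -4 -o addr show eth0` output."""
    tokens = ip_addr_output.split()
    if "inet" not in tokens:
        raise ValueError("no IPv4 address found")
    return tokens[tokens.index("inet") + 1].split("/")[0]  # drop the prefix length


def portproxy_command(wsl_ip: str, port: int = AIRFLOW_PORT) -> list[str]:
    """Build the netsh call forwarding the host port to the same port in WSL."""
    return ["netsh", "interface", "portproxy", "add", "v4tov4",
            f"listenport={port}", "listenaddress=0.0.0.0",
            f"connectport={port}", f"connectaddress={wsl_ip}"]


def start_and_forward() -> None:
    """Start Docker in WSL, look up the WSL address and register the proxy rule."""
    subprocess.run(wsl_command("service", "docker", "start"), check=True)
    out = subprocess.run(wsl_command("ip", "-4", "-o", "addr", "show", "eth0"),
                         capture_output=True, text=True, check=True).stdout
    subprocess.run(portproxy_command(eth0_ipv4(out)), check=True)
```

The Airflow containers themselves would still have to be started inside WSL, for example with `docker compose up -d` via `wsl_command`; every one of these steps is glue that Docker Desktop or a native Linux server provides without extra work.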

Our conclusion for this variant is that it requires even more Linux expertise than operating a native Linux server. The number of complex workarounds needed to compensate for the missing integration with the Windows server operating system also speaks against running Airflow this way in production.

Comparing the two most promising options for installing Apache Airflow on Windows Server operating systems, Docker Desktop is the clear winner despite the additional licence costs. Even so, this variant is not ideal, as Docker Desktop is optimised for testing and development in single-user mode.

The alternatives

Based on our many years of experience, we still recommend that even customers with a Windows server landscape run Apache Airflow on a native Linux operating system. Established distributions such as Ubuntu, Red Hat Enterprise Linux or openSUSE integrate with Microsoft Active Directory domains in a mature way that is supported out of the box these days, so identity management and access control can easily be extended to a Linux server. Technical support for the operating system itself is available as a service from many providers; we are also happy to provide it directly as part of our Airflow support contracts. Running the Docker Engine natively on the Linux server eliminates many workarounds, and this setup has been tested and proven in countless installations worldwide.

If compliance does not allow for a server with a Linux operating system under in-house responsibility, obtaining Apache Airflow as a cloud service is a good alternative. There are turnkey offerings from Amazon Web Services ("Amazon Managed Workflows for Apache Airflow", MWAA) or Google Cloud Platform ("Cloud Composer"). If the requirements are more specialised or if direct contact and customer-oriented technical support are desired, NextLytics is also happy to operate an Airflow instance on the infrastructure of your choice. In any case, a cloud strategy and definition of data protection guidelines are required.

Apache Airflow on Windows Server - Our Conclusion

As an orchestration service for data warehouse and business intelligence platforms, Apache Airflow offers many advantages that are just as attractive to companies with a Windows-based server landscape. Running Airflow directly on Windows Server operating systems is possible in principle, but always involves complex workarounds, additional licence costs or both. Apache Airflow works best on Linux servers or as a cloud service, and in these variants it can usually be integrated well into Windows environments. We will be happy to advise you on the best solution: NextLytics would like to open up the possibilities of Apache Airflow to everyone.
