The PyCon DE and PyData Berlin conferences are two events organized and held annually by the Python community. While the PyCon DE agenda covers the entire Python ecosystem, PyData Berlin focuses on data analytics topics. This year the two conferences were organized together for the first time and took place from October 9th to 11th, 2019 at the event location Kosmos in Berlin. In addition to technical lectures, keynotes and workshops, there were also interactive program items such as community sprints.

Together with my colleague I attended the conference and took this opportunity for exchange, training and networking. In addition to general Python topics, there was a strong tendency towards data analytics topics of the PyData Berlin. In this blog post, I report on our impressions of the conference and present what we consider to be the most interesting topics, contributions and highlights:

In addition to contributions on specialized machine learning areas, such as time series models, automated feature engineering and Bayesian methods, there were also contributions on data visualization, which we found very exciting. In this context, two presentations on the Python modules Dash and Panel can be mentioned.

Data Visualization with Dash and Panel

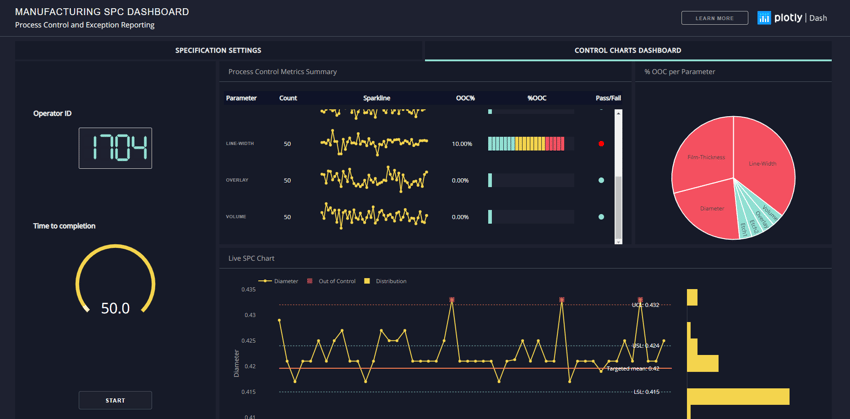

The Dash module is now available in a first official non-Beta release. With Dash it is possible to create interactive WebApps in Python without using JavaScript. The big advantage: Machine learning and data science users who frequently develop in Python do not have to learn an additional coding language or complex frameworks. At the same time, it's easier to access the modules and data used within Python, so that dynamic visualizations and dashboards can be developed more quickly.

Probably the most important flaw of Dash is a lower performance compared to JavaScript-based WebApps. Furthermore, the Enterprise version of Dash is licensed and, unlike other Python visualization tools, proprietary and liable to costs. Nevertheless, Dash is particularly suitable as a rapid prototyping tool for visualizations and interfaces that can contribute to increased development productivity.

Similar to Dash, the Panel module is also used for data visualization. Compared to Dash, Panel is more "Pythonic", i.e. more based on the usual syntax and workflows of Python. At the same time, Panel is also linked to a larger number of other modules from the Python ecosystem and is distinguished by its intuitive usability.

Overall, we consider Panel to be a very interesting approach for creating interactive and dynamic visualizations for machine learning and data analytics applications with little additional effort. Unfortunately, Panel is still in a pre-release phase, so that it is (still) not advisable to use it in a productive context.

MLOps - DevOps for Machine Learning

Probably the hottest topic this year was MLOps and the deployment of machine learning models in productive environments. MLOps is a generic term from "Machine Learning" (ML) and "DevOps". DevOps is an approach aimed at improving development and IT operations through process optimization, tools and agile approaches. While DevOps originally comes from the "classical" software development and is supposed to lead to error, time and cost savings, MLOps refers to an optimization of the development and operation of machine learning and data analytics applications. In this sense, MLOps can be understood as a sub-area of DevOps, which deals especially with machine learning specific problems and characteristics.The entire product lifecycle of a data analytics or machine learning comprises a multitude of steps and is too often reduced to individual partial aspects of the entire process. While many machine learning models and applications are implemented as proof-of-concept within a few weeks or months, the majority of these applications probably never make the leap into a productive environment. The following list contains a selection of reasons for this surprisingly widespread problem:

- Lack of scalability due to lack of and inappropriate IT resources

- High time and personnel expenditure for data provision and cleansing, orchestration of workflows as well as monitoring and preparation of results due to lack of interfaces and automation

- Data Scientists are not trained software developers and lack knowledge of test management and DevOps practice

- Difficult collaboration within data science teams: no source code management, incompatible software versions and no common development environment

In order to face these problems, tools from the classic DevOps area are also used. Particularly popular in this context are docker containers, which enable a simple, platform-independent deployment of ML & Data Analytics applications. Deployment via docker containers is also very well scalable and robust with the help of platform solutions such as Kubernetes or platforms based on them such as RedHat OpenShift. The necessary orchestration and automation of complex workflows can be greatly simplified by tools such as Jenkins or Apache Airflow.

Meanwhile there are also the first specific MLOps tools like MLflow and Kubeflow, which try to support the entire product life cycle of ML applications. We found the lecture to the newly published module Kedro particularly interesting in view of this.

Support of the product life cycle of ML applications & data pipelines with Kedro

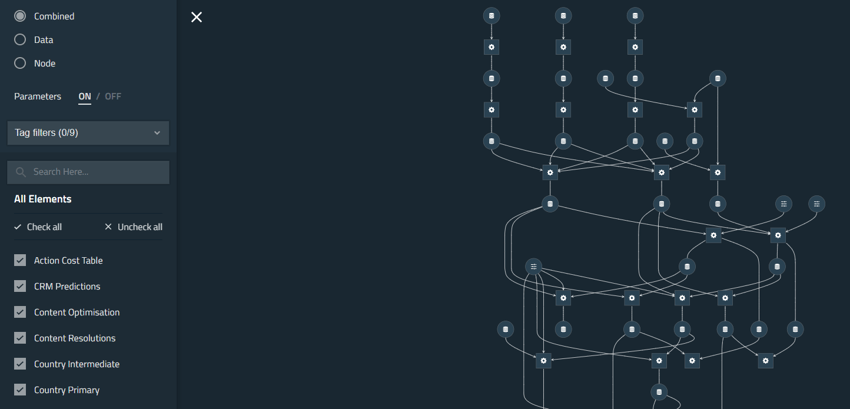

Kedro was initially developed as a proprietary Python module for customers of McKinsey subsidiary QuantumBlack, but was released as an open source module for the entire community due to positive customer feedback. Kedro's goal is to simplify and accelerate the development and productive operation of machine learning applications and data pipelines, thus making them more efficient. In order to achieve this, Kedro helps to standardize the ML application development process and to adopt frequent development steps completely. At the same time, Kedro provides extensions for easy deployment of an ML application in a docker container (Kedro-Docker) or for workflow orchestration by Apache Airflow (Kedro-Airflow). Furthermore, a powerful visualization tool (Kedro-Viz) is included with which complex processes can be mapped automatically and comprehensibly.

Overall, Kedro is following the problem already described, namely that the transition of an application from development to productive operation is difficult and takes a lot of time. We think that Kedro makes a very promising impression and is a possible tool within a MLOps approach.

Algorithmic Decision Systems and Ethics - Algo.Rules

Apart from topics that are purely technically motivated, there were also lectures at PyConDE / PyData Berlin 2019 that focused on various social aspects. One example is the lecture "Algo.Rules - How do we get the ethics into the code?", which focuses on the ethics and regulation of algorithms.

Algo Rules is a set of rules conceived by the Bertelsmann Stiftung for the acceptable design of algorithmic decision-making systems, consisting of nine guiding principles. At its core, these rules are motivated by the fact that machine-supported decision-making systems can bring not only opportunities, but also risks and problems. One negative example is the case of an Amazon AI recruiting algorithm, which initially unnoticed developed an unwanted (and unfounded) bias at the expense of female applicants.

In addition to ethical aspects, the Algo Rules are also motivated by legal questions. If algorithms make important decisions, such as a decision about a person's creditworthiness, who is legally responsible for any errors or damage that may occur? And: To what extent must such algorithmic decisions also be comprehensible and understandable for people? An exciting topic, as we think.

PyCon DE / PyData Berlin 2019 - Our Conclusion

All in all, our impressions of PyCon DE / PyData Berlin were very positive. The only negative point from our point of view was that the venue was relatively small compared to the number of visitors. The consequence was that some rooms were so crowded that not all visitors could be admitted. Despite this small shortcoming, we can warmly recommend the conference to all Python users in the data analytics environment and look forward to PyCon DE / PyData Berlin next year.

Note: Many of the presentations will be put online by the organizers after some time. As soon as this has been done, we will add a list of links to some of the presentations we think are worth watching.