The Apache Airflow workflow management platform not only provides a comprehensive web interface for managing and monitoring automations built as workflows; its expanded REST API also opens up further applications. In particular, developers in business intelligence, data engineering and machine learning can use the REST API to tailor workflow management to their needs. In this blog post, we introduce Airflow's API and give you important tips on configuring Airflow securely and using its endpoints. We also hope our application ideas inspire you to implement your own.

Application Ideas

First, we would like to introduce some application scenarios enabled by Apache Airflow's enhanced API:

- On-demand workflow launching

Even when the experimental API was the only external access point, a main use case was starting workflows in response to events in external systems and processes. In machine learning, for example, a retraining run is started when the performance of the production model drops below a threshold. However, since Airflow 2.2, scheduling that is detached from a fixed execution interval can also be handled via timetables.
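A minimal sketch of such a trigger, assuming the monitoring system holds a `requests.Session` (`session`) with credentials for the Airflow API and knows the base URL of the webserver (`base_url`). The DAG id `retrain_model`, the metric, the threshold and the keys inside `conf` are hypothetical; the endpoint `POST /api/v1/dags/{dag_id}/dagRuns` is the same one used in the code example at the end of this post.

```python
def trigger_retraining_if_needed(session, base_url, score, threshold,
                                 dag_id="retrain_model"):
    """Start a retraining DAG run if the model score drops below a threshold.

    session: a requests.Session with authentication configured (assumption).
    dag_id:  hypothetical name of the retraining DAG.
    Returns the dag_run_id of the new run, or None if nothing was triggered.
    """
    if score >= threshold:
        return None  # the production model still performs well enough
    response = session.post(
        f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns",
        # "conf" is handed to the DAG run; these keys are illustrative only
        json={"conf": {"reason": "score_below_threshold", "score": score}},
    )
    response.raise_for_status()  # fail loudly on 401/403/404 etc.
    return response.json()["dag_run_id"]
```

For example, with `session = requests.Session()` and `session.auth = ("user", "password")`, a call such as `trigger_retraining_if_needed(session, "http://localhost:8080", score=0.71, threshold=0.8)` would start a run and return its ID.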

- Changing variables in the course of error recovery

If the workflows in Airflow are designed so that the target system of a data workflow is selected via a short identifier stored in an Airflow variable, that variable can be reset with an API request when an error occurs. This temporarily redirects the traffic to another system while the error is being handled.
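A sketch of such a switch via `PATCH /api/v1/variables/{variable_key}`, assuming a `requests.Session` (`session`) with credentials and the webserver URL (`base_url`). The variable name `target_system` and the system identifiers are hypothetical; the DAGs are assumed to read the variable at run time, for example with `Variable.get("target_system")`.

```python
def switch_target_system(session, base_url, system, key="target_system"):
    """Redirect data workflows by updating the Airflow variable that names
    their target system. `key` is a hypothetical variable name."""
    response = session.patch(
        f"{base_url.rstrip('/')}/api/v1/variables/{key}",
        # the request body repeats the key and carries the new value
        json={"key": key, "value": system},
    )
    response.raise_for_status()
    return response.json()["value"]  # value as stored by Airflow
```

Switching back after the incident is the same call with the original identifier.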

- Automatic user management

User profiles can be created (POST), retrieved (GET) and modified (PATCH) via the API. This is the prerequisite for automated user management, in which, for example, the initial users are created on both the test and the production system via the API. Linking Airflow to your central user directory, however, does not require custom integration scripts against the REST API: a wide variety of standardized authentication protocols can be configured directly via the underlying Flask framework.
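A sketch of creating the same initial users on several systems via `POST /api/v1/users` (available in recent Airflow 2.x releases). It assumes a `requests.Session` (`session`) with admin credentials and the webserver URL (`base_url`); the user data are placeholders, and the role names must already exist in the target instance (`Viewer` is one of Airflow's default roles).

```python
def build_user_payload(username, email, first_name, last_name, password,
                       roles=("Viewer",)):
    """Request body for POST /api/v1/users; roles are referenced by name."""
    return {
        "username": username,
        "email": email,
        "first_name": first_name,
        "last_name": last_name,
        "password": password,
        "roles": [{"name": role} for role in roles],
    }


def create_users(session, base_url, users):
    """Create each user in `users` and return the created usernames."""
    created = []
    for user in users:
        response = session.post(f"{base_url.rstrip('/')}/api/v1/users", json=user)
        response.raise_for_status()  # e.g. 409 if the user already exists
        created.append(response.json()["username"])
    return created
```

Calling `create_users` once per system (test and production), each with its own session and URL, keeps the initial user setup identical.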

- Basis for advanced applications

- Synchronous and asynchronous system linking

Two-way linking of systems is easy to realize via the API. For example, process chains in SAP BW and workflows in Airflow can be linked both asynchronously and synchronously. You can read more about this in our blog post on the topic.
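For the synchronous case, the calling system has to wait until the triggered workflow has finished. A minimal polling sketch, assuming a `requests.Session` (`session`) with credentials and the webserver URL (`base_url`): it triggers a run via `POST /api/v1/dags/{dag_id}/dagRuns` and then queries `GET /api/v1/dags/{dag_id}/dagRuns/{dag_run_id}` until the run is `success` or `failed`. Poll interval and timeout are arbitrary defaults.

```python
import time
from urllib.parse import quote

TERMINAL_STATES = {"success", "failed"}


def run_dag_synchronously(session, base_url, dag_id, conf=None,
                          poll_interval=30.0, timeout=3600.0):
    """Trigger a DAG run and block until it reaches a terminal state."""
    runs_url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    response = session.post(runs_url, json={"conf": conf or {}})
    response.raise_for_status()
    # run IDs such as "manual__2021-01-01T00:00:00+00:00" contain ':' and '+',
    # so the ID is percent-encoded before it is put into the URL path
    run_url = f"{runs_url}/{quote(response.json()['dag_run_id'], safe='')}"

    deadline = time.monotonic() + timeout
    while True:
        status = session.get(run_url)
        status.raise_for_status()
        state = status.json()["state"]
        if state in TERMINAL_STATES:
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError(f"DAG run still '{state}' after {timeout} s")
        time.sleep(poll_interval)
```

The asynchronous variant simply stops after the POST request and lets the workflow report back on its own, for example through a final task that calls the other system.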

How are these application ideas implemented with Apache Airflow? In the next section, we will look at the technical context of the RESTful API.

Design and configuration of the REST API

With the release of Apache Airflow 2.0 in December 2020, the API left its experimental status and became suitable for production use. Future developments of the API are intended not to break the existing endpoints. The API is specified using OpenAPI 3.

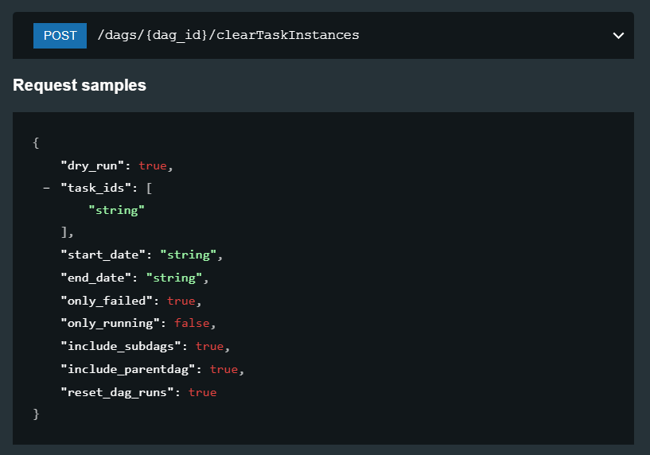

API responses return information in JSON format, and the endpoints likewise expect their input as a JSON object.

Clearing task instances as an example of a POST endpoint, with the schema of the required information, such as the date range (start_date and end_date)
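Clearing task instances is handled by `POST /api/v1/dags/{dag_id}/clearTaskInstances`. A cautious sketch, assuming a `requests.Session` (`session`) with credentials and the webserver URL (`base_url`): with `dry_run` set, Airflow only reports which task instances would be cleared, which is a sensible check before a real clear. Only a few of the schema's fields are used here.

```python
def preview_clear(session, base_url, dag_id, start_date, end_date,
                  only_failed=True):
    """Return the task IDs that a clear in the given date range would affect,
    without actually clearing anything."""
    response = session.post(
        f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/clearTaskInstances",
        json={
            "dry_run": True,            # report only, do not clear
            "start_date": start_date,   # ISO 8601, e.g. "2021-06-01T00:00:00+00:00"
            "end_date": end_date,
            "only_failed": only_failed,
        },
    )
    response.raise_for_status()
    return [ti["task_id"] for ti in response.json()["task_instances"]]
```

Sending the same body with `"dry_run": False` would then perform the clear.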

Documentation

As the API has evolved, not only has the number of endpoints increased, but so has the quality of the documentation. While the experimental API left developers with a significant amount of trial and error to pass the required input correctly and reuse the output efficiently, the documentation of Airflow's new API covers every endpoint in full.


Security

To use the REST API, a few settings must be made in the configuration file of the Airflow installation. To enable the API, an authentication backend must be specified (the auth_backend option in the [api] section). In Airflow 2.0, the default setting airflow.api.auth.backend.deny_all rejects all requests. In addition, established authentication methods are available: for example, Kerberos or basic authentication against the users in the Airflow database can be selected. If Airflow's user management is connected to an OAuth2 directory service, more advanced token-based authentication and authorization mechanisms can also be used for the REST API.
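In airflow.cfg, basic authentication is enabled with `auth_backend = airflow.api.auth.backend.basic_auth` in the `[api]` section. The following sketch, a hypothetical helper rather than part of Airflow, reads a configuration file and reports which backend(s) are set. It assumes that Airflow 2.0 to 2.2 use the option `auth_backend`, while later 2.x releases use the comma-separated `auth_backends`; environment variables such as `AIRFLOW__API__AUTH_BACKEND` override the file and are not checked.

```python
import configparser


def api_auth_backends(cfg_text):
    """Return the API auth backend(s) set in the [api] section of airflow.cfg.
    An empty list means no explicit setting, so Airflow's default applies."""
    # interpolation=None: airflow.cfg contains '%' in log format strings
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    if not parser.has_section("api"):
        return []
    api = parser["api"]
    raw = api.get("auth_backends") or api.get("auth_backend", "")
    return [backend.strip() for backend in raw.split(",") if backend.strip()]


def api_auth_enabled(cfg_text):
    """True if a backend other than deny_all is explicitly configured."""
    backends = api_auth_backends(cfg_text)
    return bool(backends) and "airflow.api.auth.backend.deny_all" not in backends
```

Usage: `api_auth_enabled(open("airflow.cfg").read())`, run on the Airflow host.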

Airflow's customizability shows here as well: if required, you can implement and configure your own authentication backend. Setting enable_experimental_api = True activates the deprecated experimental API. However, apart from compatibility with Airflow 1, this offers no further benefit, since all of its endpoints are covered by the revised API.

Endpoints

The available endpoints cover almost all functions provided by the Airflow web interface and CLI. Tasks, workflows (DAGs), users, variables and connections can be managed flexibly through the API. In the following section, some common endpoints and a concrete code example for using the API are presented.
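List endpoints such as `/api/v1/dags`, `/api/v1/variables` or `/api/v1/connections` return paged results of the form `{"<resource>": [...], "total_entries": n}` and accept the query parameters `limit` and `offset`. A sketch of a generator that walks through all pages, assuming a `requests.Session` (`session`) with credentials and the webserver URL (`base_url`); the page size of 100 is an assumption and may be capped by the server configuration.

```python
def iter_collection(session, base_url, resource, page_size=100):
    """Yield every item of a top-level list endpoint, e.g. "dags",
    "variables" or "connections", page by page."""
    url = f"{base_url.rstrip('/')}/api/v1/{resource}"
    offset = 0
    while True:
        response = session.get(url, params={"limit": page_size, "offset": offset})
        response.raise_for_status()
        page = response.json()
        items = page[resource]  # the collection key matches the resource name
        yield from items
        offset += len(items)
        # stop on an empty page or once all entries have been fetched
        if not items or offset >= page["total_entries"]:
            break
```

For example, `[d["dag_id"] for d in iter_collection(session, base_url, "dags")]` collects the IDs of all DAGs.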

Using the API

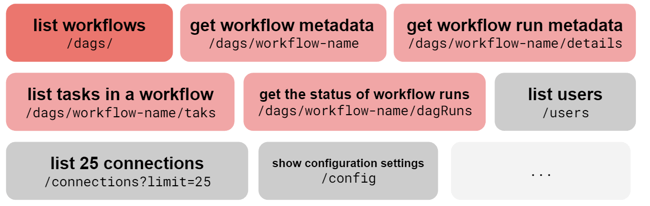

Finally, we present the endpoints and show how to use the API in a code sample. Since the endpoints cover almost the full functionality of Airflow, we limit ourselves to an overview of the most important ones. The API documentation contains detailed information about the expected input of the PATCH and POST endpoints.

GET requests

GET endpoints mainly return information about workflows, workflow runs, tasks, variables, users and connections. Log files and the configuration file are accessible as well, as is the health status of the scheduler and the web server.
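The health status is available at `GET /api/v1/health`, which returns one entry per component, such as `metadatabase` and `scheduler`, each with a `status` field. A minimal monitoring sketch, assuming a `requests.Session` (`session`) and the webserver URL (`base_url`). Components reported with a `null` status, which can occur in newer releases for optional services, are deliberately not treated as failures here; that is a design choice of this sketch.

```python
def unhealthy_components(health):
    """Names of components whose status is reported but not "healthy"."""
    return sorted(
        name for name, info in health.items()
        if isinstance(info, dict) and info.get("status") not in ("healthy", None)
    )


def check_health(session, base_url):
    """Query the health endpoint. If the web server is down, the request
    itself raises a connection error before any status can be read."""
    response = session.get(f"{base_url.rstrip('/')}/api/v1/health")
    response.raise_for_status()
    return unhealthy_components(response.json())
```

An empty list means all reporting components are healthy.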

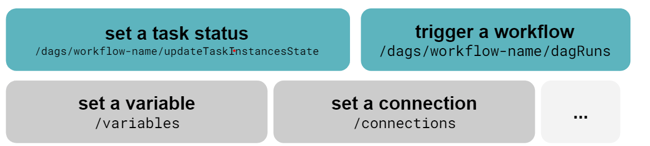

POST requests

To perform actions remotely in Apache Airflow, many POST endpoints are available. Here you can start a workflow, create new connections and variables, and set the status of tasks.
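As an example of a POST request, a sketch that creates a connection via `POST /api/v1/connections`, assuming a `requests.Session` (`session`) with credentials and the webserver URL (`base_url`). The connection ID, host and login in the usage note are hypothetical; only a subset of the connection fields is used.

```python
def create_connection(session, base_url, connection_id, conn_type, host,
                      login=None, password=None, port=None):
    """Create an Airflow connection and return its ID."""
    body = {"connection_id": connection_id, "conn_type": conn_type, "host": host}
    # only send the optional fields that were actually given
    optional = {"login": login, "password": password, "port": port}
    body.update({key: value for key, value in optional.items() if value is not None})
    response = session.post(f"{base_url.rstrip('/')}/api/v1/connections", json=body)
    response.raise_for_status()
    return response.json()["connection_id"]
```

For example: `create_connection(session, base_url, "dwh_backup", "postgres", "db.example.com", login="etl", port=5432)`.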

In addition to the GET and POST requests, there are also a variety of PATCH requests, which you can find in the official documentation.
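A typical PATCH request pauses or unpauses a workflow via `PATCH /api/v1/dags/{dag_id}`. The sketch below assumes a `requests.Session` (`session`) with credentials and the webserver URL (`base_url`); the optional `update_mask` query parameter restricts the update to the listed field.

```python
def set_dag_paused(session, base_url, dag_id, paused=True):
    """Pause (or unpause) a DAG and return the resulting is_paused flag."""
    response = session.patch(
        f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}",
        params={"update_mask": "is_paused"},  # update only this field
        json={"is_paused": paused},
    )
    response.raise_for_status()
    return response.json()["is_paused"]
```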

If the API is enabled in Airflow's settings, it can be called from any system that can send HTTP requests. For first experiments, the Postman tool is a good choice, or you can adapt and run the following Python code example. You only need to specify the URL of your Airflow instance and the credentials of an authorized user.

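```python
import requests
from requests.auth import HTTPBasicAuth

# adjust to your environment: URL of the Airflow webserver and a user
# from the Airflow DB (or the configured authentication backend)
url = "http://localhost:8080"
auth = HTTPBasicAuth("user", "password")
headers = {"Content-Type": "application/json", "Accept": "application/json"}

# add additional parameters, passed to the DAG run as "conf"
dag_config = {}
data = {"conf": dag_config}

r = requests.post(url + "/api/v1/dags/ExampleWorkflow/dagRuns",
                  auth=auth, headers=headers, json=data)
# raise an exception if the request failed (e.g. 401, 403, 404)
r.raise_for_status()
# the run has only been created at this point, so keep its ID for status queries
dag_run_id = r.json()["dag_run_id"]
```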

In summary, Apache Airflow's stable API provides a secure and comprehensive basis for exciting use cases and stands out with improved usability and highly customizable settings.
