Just in time for the end of the year, the eagerly awaited major update of the workflow management platform Apache Airflow has arrived. Apache Airflow 2.0 rewards long-time users with even faster execution of their workflows, while newcomers benefit from improved usability in many areas. The open-source platform, known for its excellent scalability, remains free to use under the Apache License.

In this article, we present the most important changes to help you achieve state-of-the-art workflow management. If you would like to understand Apache Airflow from the ground up, we recommend our whitepaper "Effective workflow management with Apache Airflow 2.0". It explains the most important concepts in more detail and offers practical application ideas for the new features of the major release.

Concepts and ideas in Apache Airflow

To begin with, here is a summary of the main ideas behind the workflow management platform. In Airflow, everything revolves around workflows, which are implemented as directed acyclic graphs (DAGs) of tasks. Such a workflow might, for example, merge several data sources and then run an analysis script on the result. Airflow schedules the tasks while respecting the dependencies between them and orchestrates the systems involved. Integrations with Amazon S3, Apache Spark, Google BigQuery, Azure Data Lake and many more are either included in the official installation or available as production-ready contributions from the community.
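To make the DAG idea concrete, here is a toy model in plain Python. It is not Airflow's own API. It represents the example workflow above as a mapping from each task to the tasks it depends on, and the task names are hypothetical. It uses the standard library's `graphlib` (Python 3.9+) to group the tasks into waves that respect their dependencies. This is roughly the ordering decision a scheduler has to make.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow: two data sources are merged, then analysed.
# Each task maps to the set of upstream tasks it depends on.
workflow = {
    "extract_sales": set(),
    "extract_customers": set(),
    "merge_sources": {"extract_sales", "extract_customers"},
    "run_analysis": {"merge_sources"},
}


def execution_waves(dag):
    """Group tasks into waves: all tasks in one wave have their dependencies
    satisfied by earlier waves and could therefore run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)  # pretend every task in this wave succeeded
    return waves


for number, wave in enumerate(execution_waves(workflow), start=1):
    print(f"wave {number}: {wave}")
```

The two extraction tasks land in the first wave, `merge_sources` in the second and `run_analysis` in the third. A cyclic definition is rejected with an error, which is exactly why workflows have to be acyclic.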

The main functions of Apache Airflow are:

- Define, schedule, and monitor workflows

- Orchestrate third-party systems to execute tasks

- Provide a web interface for excellent visibility and management capabilities

A modern user interface

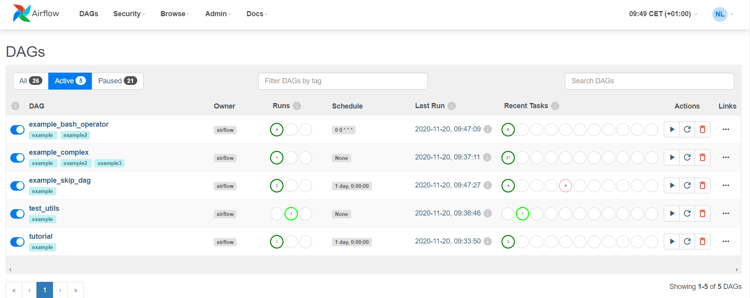

Unlike in many other open-source tools, Apache Airflow's web interface is not a neglected afterthought. The graphical interface guides the user through administrative tasks such as managing workflows and users. Numerous visualizations of a workflow's structure and status, as well as of its execution times, have always given a good overview of the current state of workflow runs.

The new user interface of Apache Airflow 2.0 has a lighter design in which rarely used functions recede into the background, making room for more clarity. For example, a workflow can be paused, started and deleted directly from the start page, while detailed monitoring and the code view have moved to a context menu. Long-time users will nevertheless find their way around right away, as the overall layout has not changed fundamentally.

Another highlight of the graphical user interface is the auto-refresh option, which makes live monitoring much more pleasant.

Airflow API

Airflow's programming interface has also been upgraded: in the new version, the REST API has outgrown its experimental status. Although the old interface remains active for the time being, the new API is worth a look thanks to its many additional functions and its consistent OpenAPI specification. Overall, it covers all functions of the web interface, those of its experimental predecessor and the typical functions of the command line interface. Security was also a focus during development, and permission management for the API and the web interface has been unified.
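As a sketch of what a client of the stable REST API can look like, the following code builds requests against endpoints under `/api/v1`: `GET /dags`, `PATCH /dags/{dag_id}` and `POST /dags/{dag_id}/dagRuns`. Several details are placeholders for your own setup: the host, the credentials, the DAG ID `example_dag`, and the assumption that basic authentication is enabled as the API auth backend. The sketch only constructs the requests with the standard library, so it runs without a server.

```python
import base64
import json
import urllib.request

# Placeholders (assumptions): adjust URL and credentials to your installation;
# the API must be configured to accept basic authentication.
BASE_URL = "http://localhost:8080/api/v1"
USER, PASSWORD = "admin", "admin"


def api_request(method, path, payload=None):
    """Build an authenticated JSON request for the stable REST API."""
    token = base64.b64encode(f"{USER}:{PASSWORD}".encode()).decode()
    data = json.dumps(payload).encode() if payload is not None else None
    request = urllib.request.Request(f"{BASE_URL}{path}", data=data, method=method)
    request.add_header("Authorization", f"Basic {token}")
    request.add_header("Content-Type", "application/json")
    return request


list_dags = api_request("GET", "/dags")
pause_dag = api_request("PATCH", "/dags/example_dag", {"is_paused": True})
trigger_run = api_request("POST", "/dags/example_dag/dagRuns", {"conf": {}})

# To actually send a request to a running webserver:
# with urllib.request.urlopen(list_dags) as response:
#     print(json.load(response))
```

Pausing a DAG and triggering a run are the same operations that the start page of the web interface offers, which illustrates how closely the new API mirrors the UI.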

Improved performance of the scheduler

An architecturally profound change in the new major release concerns the scheduler component. The scheduler monitors all workflows and the tasks within them, and starts each task as soon as its scheduled time has arrived and its upstream dependencies are fulfilled.
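The start condition described above can be written as a toy predicate. The function `is_runnable` and its data model are hypothetical simplifications, not Airflow code. The dependency check mirrors Airflow's default `all_success` trigger rule, under which every upstream task must have succeeded.

```python
from datetime import datetime, timezone


def is_runnable(task, due_at, now, upstream, states):
    """Toy readiness check for a single task.

    task     -- name of the task to check
    due_at   -- time at which the task's run is scheduled
    now      -- current time
    upstream -- dict: task name -> list of tasks it depends on
    states   -- dict: task name -> state ("success", "running", ...) or None
    """
    if now < due_at:
        return False  # scheduled time has not arrived yet
    if states.get(task) is not None:
        return False  # task was already started or has finished
    # Like Airflow's default "all_success" trigger rule:
    # every upstream task must have finished successfully.
    return all(states.get(dep) == "success" for dep in upstream.get(task, []))


upstream = {"merge_sources": ["extract_sales", "extract_customers"]}
states = {"extract_sales": "success", "extract_customers": "running", "merge_sources": None}
due = datetime(2021, 1, 1, tzinfo=timezone.utc)
now = datetime(2021, 1, 1, 6, 0, tzinfo=timezone.utc)

print(is_runnable("merge_sources", due, now, upstream, states))  # False: one source still running
states["extract_customers"] = "success"
print(is_runnable("merge_sources", due, now, upstream, states))  # True
```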

The community had long been calling for better performance, because short tasks suffered from high latency. This wish has now been granted: the new scheduler delivers an enormous speed improvement and can run as multiple instances in an active/active setup. This also improves availability and resilience against failures.
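Running several schedulers at once raises the question of how a task is prevented from being started twice. As far as we know, Airflow 2.0 coordinates its schedulers through row-level locks in the metadata database. The toy model below imitates only this claim-once principle, and it does so in memory. Threads stand in for scheduler instances, and a `threading.Lock` per task stands in for a locked database row. `TaskTable` and `scheduler_loop` are hypothetical names.

```python
import threading


class TaskTable:
    """Toy stand-in for the task table in a metadata database:
    one state and one lock per row (task)."""

    def __init__(self, task_ids):
        self.state = {task_id: "scheduled" for task_id in task_ids}
        self.locks = {task_id: threading.Lock() for task_id in task_ids}

    def try_claim(self, task_id, scheduler):
        """Claim a task for `scheduler` unless another scheduler holds its lock.
        Skipping locked rows instead of waiting mimics the idea of
        SELECT ... FOR UPDATE SKIP LOCKED."""
        lock = self.locks[task_id]
        if not lock.acquire(blocking=False):
            return False  # another scheduler is working on this row
        try:
            if self.state[task_id] != "scheduled":
                return False  # already claimed earlier
            self.state[task_id] = f"queued by {scheduler}"
            return True
        finally:
            lock.release()


def scheduler_loop(name, table, claims):
    """One scheduler instance: walk over all tasks and claim what it can."""
    for task_id in list(table.locks):
        if table.try_claim(task_id, name):
            claims.append((name, task_id))


table = TaskTable([f"task_{i}" for i in range(100)])
claims = []
schedulers = [
    threading.Thread(target=scheduler_loop, args=(f"scheduler_{n}", table, claims))
    for n in range(3)
]
for thread in schedulers:
    thread.start()
for thread in schedulers:
    thread.join()

print(len(claims), "tasks claimed,", len({t for _, t in claims}), "distinct")
# prints: 100 tasks claimed, 100 distinct
```

A scheduler skips a locked task instead of waiting for it, and this does not leave any task behind. Whichever scheduler holds the lock either claims the task itself or finds that it was already claimed.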

Reusing parts of the workflow with TaskGroups

Reusable code makes development more efficient and improves maintainability. The previous concept of SubDAGs offered these advantages, but only at the cost of performance: embedded workflow parts did not support parallel execution and were therefore used less often than intended. Airflow 2.0 offers the same concept without these drawbacks under the name TaskGroups.

Nested display of TaskGroups in the Airflow interface
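In Airflow 2.0, the corresponding class is `TaskGroup` in `airflow.utils.task_group`. By default, it prefixes the IDs of its tasks with the group ID. The following plain-Python toy is not Airflow code, and `Workflow`, `group` and `add_cleaning_steps` are hypothetical names. It imitates just two ideas. First, a group is only a namespace inside the same workflow. Second, a function can stamp out the same group for several inputs.

```python
from contextlib import contextmanager


class Workflow:
    """Minimal stand-in for a DAG that only records task IDs."""

    def __init__(self):
        self.task_ids = []
        self._group_path = []  # currently open (possibly nested) groups

    def add_task(self, name):
        task_id = ".".join([*self._group_path, name])
        self.task_ids.append(task_id)
        return task_id

    @contextmanager
    def group(self, group_id):
        """Tasks created inside this block are prefixed with the group ID.
        They stay part of the same workflow, so nothing forces them to run
        sequentially (unlike a SubDAG, which is a separate workflow)."""
        self._group_path.append(group_id)
        try:
            yield
        finally:
            self._group_path.pop()


def add_cleaning_steps(workflow, source):
    """Reusable building block: the same steps for any data source."""
    with workflow.group(f"clean_{source}"):
        workflow.add_task("validate")
        workflow.add_task("deduplicate")


workflow = Workflow()
add_cleaning_steps(workflow, "sales")
add_cleaning_steps(workflow, "customers")
workflow.add_task("merge_sources")
print(workflow.task_ids)
# ['clean_sales.validate', 'clean_sales.deduplicate',
#  'clean_customers.validate', 'clean_customers.deduplicate', 'merge_sources']
```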

TaskFlow API

Workflows are easy to create in the Python programming language. In addition to defining tasks and their dependencies (that is, the structure of the workflow itself), individual Python functions can be executed as workflow steps. For this, the task is defined with the PythonOperator.

When several Python tasks run one after the other, they can now be chained more easily, and each one can directly use the output of the previous function. The output is passed on automatically in the background (even on distributed systems), and the task order is derived implicitly from these data dependencies instead of having to be declared explicitly.
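In Airflow 2.0, functions are decorated with `@task` from `airflow.decorators`, and return values travel between tasks as XComs. The stdlib-only toy below imitates that mechanism; `Flow`, `Ref` and the example tasks are hypothetical names. Calling a decorated function does not run it but records a task. Any argument that is the output of another task becomes an upstream dependency.

```python
import functools


class Ref:
    """Placeholder for the future return value of a task."""

    def __init__(self, task_name):
        self.task_name = task_name


class Flow:
    """Toy model of the TaskFlow idea (not Airflow's implementation)."""

    def __init__(self):
        self.calls = []  # (task name, function, arguments) in call order

    def task(self, func):
        @functools.wraps(func)
        def record(*args):
            # Assumption: each task function is called only once per flow.
            self.calls.append((func.__name__, func, args))
            return Ref(func.__name__)
        return record

    def dependencies(self):
        """Upstream tasks are inferred from arguments that are task outputs."""
        return {name: [a.task_name for a in args if isinstance(a, Ref)]
                for name, _, args in self.calls}

    def run(self):
        results = {}  # stands in for Airflow's XCom storage
        # Call order is already a valid execution order: a Ref can only be
        # passed on after the task producing it has been recorded.
        for name, func, args in self.calls:
            values = [results[a.task_name] if isinstance(a, Ref) else a for a in args]
            results[name] = func(*values)
        return results


flow = Flow()


@flow.task
def extract():
    return [3, 1, 2]


@flow.task
def transform(rows):
    return sorted(rows)


@flow.task
def load(rows):
    return f"loaded {len(rows)} rows"


load(transform(extract()))  # no explicit ordering needed
print(flow.dependencies())  # {'extract': [], 'transform': ['extract'], 'load': ['transform']}
print(flow.run()["load"])   # loaded 3 rows
```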

Smart Sensor

In Apache Airflow, tasks are executed in the order defined by their dependencies. Sometimes it makes sense to pause a workflow until certain conditions (e.g. the availability of data) are met. This is the job of so-called sensors: they check a wide variety of conditions at fixed intervals and let the workflow continue only once the condition is satisfied. However, when used excessively, sensors tie up a significant share of the resources available in the Airflow cluster with their frequent checks.
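In Airflow, sensors derive from `BaseSensorOperator`, implement a `poke()` check and are configured with parameters such as `poke_interval` and `timeout`. The stdlib toy below shows only the underlying poke loop; the function `wait_for` and the example condition are hypothetical. While such a loop waits, it keeps its execution slot occupied, which is where the resource cost of many sensors comes from.

```python
import time


def wait_for(condition, poke_interval=0.1, timeout=1.0):
    """Toy sensor: call `condition` every `poke_interval` seconds until it
    returns True, or raise TimeoutError after `timeout` seconds.
    Returns the number of checks that were needed."""
    deadline = time.monotonic() + timeout
    checks = 0
    while True:
        checks += 1
        if condition():
            return checks
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition still not met after {checks} checks")
        time.sleep(poke_interval)  # the waiting slot stays occupied meanwhile


# Hypothetical condition: the data "arrives" on the third check.
attempts = {"count": 0}


def data_available():
    attempts["count"] += 1
    return attempts["count"] >= 3


print(wait_for(data_available, poke_interval=0.01))  # 3
```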

In the new Smart Sensors mode, sensor checks are bundled and therefore consume fewer resources. This early-access feature has already been tested extensively, but compatibility issues may arise in future releases if unplanned structural changes become necessary.
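The bundling idea can be illustrated with another stdlib toy; it is not Airflow's Smart Sensor implementation, and `SensorBundle` and `ready_after` are hypothetical names. Instead of every sensor running its own waiting loop, a single service walks over all pending conditions once per round. It releases each sensor as soon as that sensor's condition holds.

```python
import time


class SensorBundle:
    """Toy model of bundled sensors: one loop checks all pending conditions."""

    def __init__(self):
        self.pending = {}  # sensor name -> condition callable

    def register(self, name, condition):
        self.pending[name] = condition

    def run(self, poke_interval=0.01, max_rounds=100):
        finished = []  # (round number, sensor name) in completion order
        for round_no in range(1, max_rounds + 1):
            # Copy the items so finished sensors can be removed while iterating.
            for name, condition in list(self.pending.items()):
                if condition():
                    finished.append((round_no, name))
                    del self.pending[name]
            if not self.pending:
                break
            time.sleep(poke_interval)  # one shared wait instead of one per sensor
        return finished


def ready_after(n):
    """Hypothetical condition that becomes true on its n-th check."""
    state = {"checks": 0}

    def condition():
        state["checks"] += 1
        return state["checks"] >= n

    return condition


bundle = SensorBundle()
bundle.register("sales_file", ready_after(2))
bundle.register("customer_file", ready_after(1))
bundle.register("partner_api", ready_after(3))
print(bundle.run())  # [(1, 'customer_file'), (2, 'sales_file'), (3, 'partner_api')]
```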

Beyond the changes presented here, further steps have been taken to improve usability. For example, running Airflow on a Kubernetes cluster has been simplified and optimized.

Our Summary - Apache Airflow 2.0

There are many good reasons to use Apache Airflow 2.0, so make the most of the potential of the new major release!

If you need support with the exact configuration or want to upgrade your existing installation, do not hesitate to contact us. We also pass on our knowledge in practical workshops, so feel free to get in touch at any time!
